Getting a code change live isn’t as simple and straightforward as it used to be. A few years back, live editing with an FTP client was common. Nowadays we have a wide range of more sophisticated methods to choose from that solve issues FTP can’t, such as deploying to multiple servers and working with version control. But which method should you choose, especially if you have no experience in that field? In this article I will try to explain how each of the available methods works, what its pros and cons are, and how to make a decision without any DevOps or hosting experience.

This blog was written by Sander Mangel, Freelance Technical E-commerce Consultant for online retailers and agencies.

Table of contents:

- Atomic deploys

- Build deploys

- How do I choose the right method?

- Example: A simple GIT based blue green deploy approach for Hypernodes

Is this article for you?

If you are vaguely familiar with terms like GIT, SSH and composer this article should be for you. If not, and you are interested in getting a deeper knowledge of the technical side, I can recommend reading up on it or asking a developer, consultant or someone else with a technical background.

This is by no means a complete manual to deploying, nor is it an in-depth technical evaluation of the various methods. It’s my take on deploy strategies, the ways we get code from a version control repository to a server, and on how to decide which method best suits your needs. The topic was first discussed in the Dutchento Slack, and I’ve used some of the feedback from that thread in this article.

We’ll split the ways to deploy into two groups: atomic deploys and build deploys. But first, some terms:

- GIT / Version Control / VCS – software that versions code. This keeps a history of changes and lets you roll back to a previous version of a file.

- Commit – when changes are made to code, they are committed to GIT. A commit is a snapshot of those changes at that moment; a later commit can contain changes to the same files, captured in a new snapshot.

- Repository – a GIT repository is the collection of all the files that are in GIT, together with all their commits.

- SSH – access to a server over the internet to run commands and applications as you would on a normal computer.

- DevOps – the domain that covers both knowledge of hosting, and how an application runs on that hosting. Bridging the gap between a regular server administrator and a developer.

- FTP – a long-established way of connecting to a server to edit and transfer files.

- Composer – an application that manages libraries of third-party code in PHP projects, making it easy to add and update libraries.

- Compiling – taking human-written, human-readable files and transforming them into a different file format that is easier for a server or browser to run.

- Docker – a technology that allows for running virtual servers replicating the exact desired configuration for that server.

- Linux – an operating system like Windows that most servers run on.

Atomic deploys

This type of deployment takes the files that were changed and deploys them to the target server(s). We can do this either with a tool that pushes the files, or by using GIT on the target server and pulling in the changes. Files are added to or removed from the environment but, unlike with build deploys, the environment itself is never refreshed. This means you can manually add files and directories, but it also means there is no real procedure for quickly setting up a new environment: that will take a lot of manual steps.

Option 1: Pulling in via GIT

Directly using GIT is the easiest way to do this. GIT can be a self-hosted instance or one of the popular hosted solutions such as Bitbucket, GitHub and GitLab.

Run a git clone in the server’s public directory to fetch your files the first time; on subsequent runs all that is needed is a git pull to update to the latest version.

Upside of pulling in via GIT

- easy to set up and maintain

- easy to run

- since it’s version control it can be rolled back to a previous version

Downside of pulling in via GIT

- needs manual actions like the actual git pull command and any other post-deploy tasks

- does not scale well over multiple servers as you need to log in to each server for the git pull.
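The manual work on multiple servers can be reduced somewhat with a small loop. The following is only a sketch: the host names are made up, it assumes key-based SSH access and a checkout in /data/web/public on every server, and it defaults to a dry run that prints the commands instead of executing them.

```shell
#!/bin/sh
# Hypothetical SSH targets; replace with your own servers.
SERVERS="app@web1.example.com app@web2.example.com"
# DRY_RUN=1 (the default in this sketch) prints the commands instead of running them.
DRY_RUN="${DRY_RUN:-1}"

pull_on_all_servers() {
  for host in $SERVERS; do
    if [ "$DRY_RUN" = "1" ]; then
      echo "ssh $host 'cd /data/web/public && git pull'"
    else
      # Runs the pull remotely; any post-deploy tasks would still have to follow.
      ssh "$host" 'cd /data/web/public && git pull'
    fi
  done
}

pull_on_all_servers
```

Setting DRY_RUN=0 would run the pulls for real, one server after the other, so the servers are briefly on different versions of the code.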

Option 2: Pushing via a tool

Using a tool to deploy makes life a bit easier compared to using GIT directly. Generally, it is automated and can easily deploy to one or more servers. After giving the tool access to your repository and SSH access to your server(s), you are good to go. Examples of such tools are DeployHQ and DeployBot.

Upside of pushing via a tool

- automated and easy to maintain

- scales well for multiple servers

Downside of pushing via a tool

- needs configuring

For both of these methods, the downtime is generally quite long. First of all, while the files are being uploaded one at a time, the application’s state is undetermined, which means it should not be reachable for users. On top of that, you will need to run quite a number of post-deploy commands on the servers, either manually or through the tool you are using. All this time the application has to stay in maintenance mode.

For Magento 2 especially, between composer updates, generating static content, compiling LESS, flushing caches and so on, this can quickly run up to 30 minutes or more.

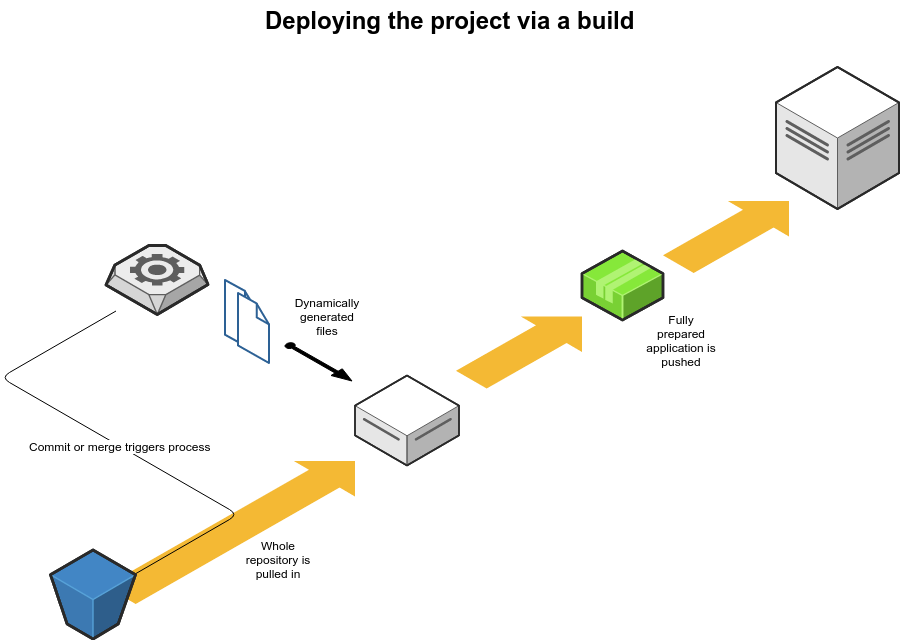

Build deploys

The second option is “building” the application before deploying. This method generally leads to less downtime, scales well and gives better assurance of a correct end result, as you can test your build before putting it live.

Building means getting the contents of the repository, adding any dynamically created files the application might need, and running the commands usually run post deploy (or at least a number of them) to make sure the application is ready to go. Here we are literally “building” up the application.

After that, all files are added to a zip or tar archive, called an “artifact”, which is then put on the target servers and unpacked. Now all that is needed is running the database migration, bringing the total downtime down to seconds.
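As a rough sketch of the packaging step, the following uses throwaway temporary directories in place of a real build machine and target server. The directory names, the file contents and the artifact name are all made up for illustration.

```shell
#!/bin/sh
set -e

# Throwaway directories standing in for the build machine and the target server.
WORK=$(mktemp -d)
BUILD_DIR="$WORK/build"      # hypothetical: where the application was built
SERVER_DIR="$WORK/server"    # hypothetical: release directory on the target
mkdir -p "$BUILD_DIR/app" "$SERVER_DIR"
echo "<?php echo 'hello';" > "$BUILD_DIR/app/index.php"

# 1. Pack the built application into a single archive: the artifact.
ARTIFACT="$WORK/artifact.tar.gz"
tar -czf "$ARTIFACT" -C "$BUILD_DIR" .

# 2. "Upload" the artifact (a real setup would use scp or rsync) and unpack it.
cp "$ARTIFACT" "$SERVER_DIR/"
tar -xzf "$SERVER_DIR/artifact.tar.gz" -C "$SERVER_DIR"

ls "$SERVER_DIR/app"
```

Because the archive is created outside the build directory, it never ends up packing itself.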

A build deploy has many variations for each step in the process, too many to describe them all, but I’ll outline what each step does and give a few tool suggestions.

The steps to follow

Version control system. Builds generally start with your version control system, which would most likely be GIT, either self-hosted or via Bitbucket, GitHub or GitLab. The process is kicked off either through a manual action or via an automated trigger, like a merge of branches or a push of code. A true build process runs from start to finish without any human intervention. This means building the code, provisioning the infrastructure (such as a server), and setting any configuration at application level.

The building itself. Next comes the building itself. This is something for which you will need to choose a tool, generally a hosted one. CodeShip is a well-known one, as is Amazon’s CodeDeploy, but Bitbucket Pipelines also does the trick very well.

The build itself roughly follows these steps:

- A virtual server is set up for the building process for example by running a Docker container. On this server, the build will take place.

- Code is pulled in from your GIT repository.

- Various dynamic files might be added such as the env.php or local.xml for Magento.

- Code is compiled, classes generated.

- All code is then packaged and sent off to either the server or an intermediate tool that puts it on the server.

The package should contain everything on application level meaning it can run on any server that has PHP. Creating a new environment should be as simple as running a build with a new target server.

Some of these steps, generally 1, 2 and 5, are automated by the tool. Steps 3 and 4 are something you design yourself. This works by having a file that contains commands that are fired off one after the other. If one fails, the build fails. Think of it as a list of commands the server should run, like the commands you used to enter on old DOS computers.
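The "list of commands where one failure fails the build" idea can be sketched in a few lines of shell. This is an illustration only: run_build is a made-up helper, and the steps are placeholders (true and false) where a real build file would list commands such as composer install.

```shell
#!/bin/sh
# Run each build step in order and stop at the first one that fails.
run_build() {
  for step in "$@"; do
    echo "running: $step"
    if ! sh -c "$step"; then
      echo "build failed at: $step"
      return 1
    fi
  done
  echo "build succeeded"
}

# All steps succeed, so the build succeeds.
run_build "true" "echo compiling" "true"

# The second step fails, so the third step is never started.
run_build "true" "false" "echo never reached" || echo "exit code: $?"
```

Hosted build tools generally work the same way: the exit code of each command decides whether the next one runs.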

Deployment. Now that we have a little package with the application, the “artifact”, we can deploy it to the destination server. For this, the tool needs SSH access. It uses this to copy the artifact to the server, unpack it and then run any commands you have specified for after the build.

Think of the actual database migration, which updates any database tables, or a cache flush. Depending on your hosting environment this is done on one or many servers, and the approach scales to as many servers as you need.

Blue/green deploy. An extra step in this process can be a blue/green deploy. In this scenario, we deploy the new application to a different directory on the server, or even to a different server. Optionally this version is tested, either manually or automatically, to make sure the deploy was successful. Then the current live version is switched for the new version in milliseconds, eliminating almost all downtime. If the code in production doesn’t work as expected, rolling back to the previous version can be done in seconds. If changes to the database were involved, rolling back requires you to have proper procedures to undo them. Support for this will be added to Magento in 2.3 thanks to the “declarative schema”.
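On a single server, the switch is often done by repointing a symbolic link. The sketch below assumes that approach and uses a throwaway directory with two made-up release directories. The smoke test is deliberately trivial.

```shell
#!/bin/sh
set -e

# Throwaway directory standing in for the parent of the web root.
ROOT=$(mktemp -d)
cd "$ROOT"

# Two releases side by side; the names are hypothetical.
mkdir release_blue release_green
echo "old version" > release_blue/index.html
echo "new version" > release_green/index.html

# "public" is a symlink to whichever release is live.
ln -s "$ROOT/release_blue" public
cat public/index.html

# Optional smoke test before going live: here just "does the entry file exist".
test -f release_green/index.html

# Switch: repoint the symlink at the new release.
# -n stops ln from following the existing link into the old release directory.
ln -sfn "$ROOT/release_green" public
cat public/index.html
```

Note that ln -sfn removes and recreates the link, which leaves a very short gap. On GNU systems, creating the new link under a temporary name and renaming it over the old one with mv -T closes that gap.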

Upside of build deploys

- needs minimal intervention to run eliminating human error

- limits downtime as everything is prepared offsite and only database migration has to run.

- can also handle complex deploy procedures like, for example, deploying to a Docker-ized server

- new environments can easily and quickly be set up.

Downside of build deploys

- the deploy process takes quite some time no matter the size of the change

- it requires considerable DevOps knowledge to set up

How do I choose the right method?

Picking the method that best suits you comes down to the DevOps knowledge you have in-house or are willing to pay for, and the same goes for the tools that are required.

The most basic setup

The most basic setup will be using GIT. This requires no extra tools and only limited knowledge. The commands that need to run after pulling in the files can be saved in a small script you run each time, reducing the whole process to only a few steps. Below you will find an example of how to run deploys with GIT for Magento 2 on a Hypernode that even includes a blue green deploy.

This approach will work fine for smaller shops where deploys are infrequent and downtime is acceptable. Any developer can work with this methodology, making it easier to find an agency or freelancer for the odd job.

Going a bit more advanced

For shops that have more frequent development cycles and want to automate the process (to, let’s say, also deploy new changes to an acceptance server during development), an approach involving some basic tools might be a good option. Tools like DeployHQ are fairly simple to set up and do not cost too much. Since the process is automated, the developer does not have to think about any steps when deploying other than checking that it went well.

Especially for Magento 2 there will be downtime since, after the code is deployed, several tasks need to run that take considerable time.

For more demanding shops that want to do it right

If little to no downtime is acceptable, the shop runs on a cluster of servers, or you want to be absolutely sure the production, acceptance and other environments are the same, then build deploys are the way to go. This does however require DevOps knowledge, which generally doesn’t come cheap. On top of that, the deploy setup will need occasional maintenance and monitoring. You will find that many agencies already offer deploy methods like this for their projects, which would limit the setup cost but does create a vendor lock-in you should be aware of.

Example: A simple GIT based blue green deploy approach for Hypernodes

Though theoretically you could do this on other hosting providers, please note that this will need changes in the scripts and steps. I recommend asking a developer with a basic knowledge of Linux to help with the following. The steps related to Magento 2 may differ per version and setup. Please consult your developer on the exact steps.

The following commands are run from the destination server. Log in via SSH and go into the /data/web/public directory.

Make sure to give the Hypernode access to the repository by generating an SSH key and adding it as a read-only deploy key to your repository.

First we will check out the latest code from the GIT repository. Instead of simply doing a git pull, we take some extra steps that first of all make sure we are on the master branch, and then pull in any changes without taking into account any files that may have been changed on the server, as there should be none.

$ git checkout master

$ git fetch --all

$ git reset --hard origin/master
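To see what these three commands do when a file has been edited on the server, the following sketch replays them against two throwaway local repositories. The repository names, file name and commit messages are made up; "upstream" plays the hosted repository and "server" plays /data/web/public.

```shell
#!/bin/sh
set -e

WORK=$(mktemp -d)
# Identity passed per command so the sketch does not depend on global git config.
GIT="git -c user.name=demo -c user.email=demo@example.com"

# "upstream" plays the role of the hosted repository.
mkdir "$WORK/upstream"
cd "$WORK/upstream"
git init -q
git symbolic-ref HEAD refs/heads/master
echo "v1" > version.txt
git add version.txt
$GIT commit -q -m "first release"

# "server" plays the role of the checkout in the public directory.
git clone -q "$WORK/upstream" "$WORK/server"

# A new commit lands upstream, and someone edits a file on the server by hand.
echo "v2" > version.txt
$GIT commit -q -am "second release"
cd "$WORK/server"
echo "hotfix made directly on the server" > version.txt

# The three commands from the article: the local edit is discarded.
git checkout -q master
git fetch -q --all
git reset -q --hard origin/master
cat version.txt
```

The hand-made edit is gone afterwards without any warning, which is the intended behaviour here but also a reason never to change files directly on the server.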

Now that we have the latest code, we will proceed with the steps that build the Magento 2 environment. These steps would be different for Magento 1 or any other platform.

We’ll start off by putting Magento into maintenance mode, blocking any user from entering the store. Then we pull in Magento itself and the required libraries via composer and clear all temporary file directories.

Now we disable all modules, enable them again and then disable, per module, what we won’t need. This makes sure the modules are properly initialized.

Lastly we set the mode to production, compile several files and run the config import and database migration.

When done, we release the shop to the public again.

$ bin/magento maintenance:enable

$ composer install --no-ansi --no-dev --no-interaction --no-progress --no-scripts --optimize-autoloader

$ rm -r var/cache/*

$ rm -r var/log/*

$ rm -r var/view_preprocessed/*

$ rm -r pub/static/_requirejs/*

$ rm -r pub/static/adminhtml/*

$ rm -r pub/static/frontend/*

$ rm -r generated/*

$ bin/magento module:disable -c --all

$ bin/magento module:enable --all --clear-static-content

$ bin/magento module:disable Magento_Authorizenet

$ bin/magento module:disable Magento_Cybersource

$ bin/magento module:disable Magento_Dhl

[... any other modules you do not use in Magento 2 ...]

$ bin/magento deploy:mode:set production

$ bin/magento setup:static-content:deploy [locale code]

$ php -d memory_limit=-1 bin/magento setup:di:compile

$ bin/magento app:config:import --no-interaction

$ bin/magento setup:upgrade --keep-generated

$ bin/magento maintenance:disable

This will take anywhere from 10 to 30 minutes or more in general. To prevent much of this downtime we will take these steps and move them to a blue green deploy strategy which means we’ll build the environment first before actually adding it to the public directory.

Blue green deploy

First we’ll start using bash scripts instead of manual commands. A bash script is nothing more than a text file with a first line that tells Linux it is a bash file, followed by a list of commands to execute.

We’ll assume this is a server not yet in production as we need to prepare it a bit.

First of all we remove the public directory:

$ rm -Rf /data/web/public

Next we create a directory for all the dynamic, environment specific files.

$ mkdir -p /data/web/templates/public/media

$ mkdir -p /data/web/templates/staging/media

Note that we make one for both staging and public as both have their own files.

For Magento 2 this is where we place, for example, the env.php file, which contains the database credentials.

This is also where we keep the actual media directory, as we don’t want to throw it away between deploys.

Create the file build.sh in /data/web/:

#!/usr/bin/env bash

TARGET=$1 #target directory

SOURCEDIR=$(date '+%Y_%m_%d__%H_%M_%S') # directory to work from

GITBRANCH=$2

git clone --single-branch -b "$GITBRANCH" git@github.com:teamname/your-repo.git "$SOURCEDIR"

cd "$SOURCEDIR" || exit 1 # stop if the clone failed, so nothing below runs in /data/web itself

# run required commands to build Magento 2

composer install --no-ansi --no-dev --no-interaction --no-progress --no-scripts --optimize-autoloader

bin/magento deploy:mode:set production

bin/magento setup:static-content:deploy [locale code]

php -d memory_limit=-1 bin/magento setup:di:compile

# END specific Magento 2 commands

# we create a build.txt to verify success via http

GITHEAD=$(git rev-parse --short HEAD)

echo "Build: $SOURCEDIR" >> build.txt

echo "Commit: $GITHEAD" >> build.txt

# cleanup any unused files

rm -Rf .git

# place the environment specific files and media

cp "/data/web/templates/$TARGET/env.php" app/etc/env.php

ln -s "/data/web/templates/$TARGET/media" ./pub/media

cd /data/web/

echo "pointing $(pwd)/$TARGET to $(pwd)/$SOURCEDIR"

rm -f $TARGET

ln -s "$(pwd)/$SOURCEDIR" "$(pwd)/$TARGET"

# any database related steps we'll do once we linked it to the public dir

cd "$(pwd)/$TARGET"

# enable maintenance mode

bin/magento maintenance:enable

# import config and migrate database

bin/magento app:config:import --no-interaction

bin/magento setup:upgrade --keep-generated

# disable maintenance mode

bin/magento maintenance:disable

Now make the file executable with chmod +x build.sh, and we are done with the most technical part.

Running this script will get the full repository from GIT, build the required files in a separate directory and, only when it is ready, swap it with the live environment, limiting downtime.

As a bonus the previous version will still be available in its own directory and in case you need to roll back it will be as easy as pointing public back to that directory.
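A rollback could look roughly like the sketch below. It is not part of build.sh: it runs in a throwaway directory that stands in for /data/web, the two build timestamps are made up, and it relies on the fact that the directory names build.sh generates sort chronologically. It only switches code back; any database changes still need their own procedure.

```shell
#!/bin/sh
set -e

# Throwaway directory standing in for /data/web.
WEBROOT=$(mktemp -d)
cd "$WEBROOT"

# Two builds named the way build.sh names them (timestamps are made up).
mkdir 2018_06_01__10_00_00 2018_06_08__10_00_00
ln -s "$WEBROOT/2018_06_08__10_00_00" public

# Find the build that sorts directly before the one that is live now.
CURRENT=$(basename "$(readlink public)")
PREVIOUS=$(ls -d [0-9]*__* | sort |
  awk -v cur="$CURRENT" '$0 == cur { print prev; exit } { prev = $0 }')

if [ -z "$PREVIOUS" ]; then
  echo "no earlier build to roll back to"
  exit 1
fi

echo "rolling back from $CURRENT to $PREVIOUS"
# -n stops ln from following the existing link into the live build directory.
ln -sfn "$WEBROOT/$PREVIOUS" public
readlink public
```

Old build directories are never cleaned up by build.sh, so removing the oldest ones from time to time keeps disk usage in check while leaving a few to roll back to.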

This can be done for both the live, public, site as well as the staging environment on Hypernode.

Now let’s deploy the GIT master branch to live.

$ ./build.sh public master

Or our accept branch to staging.

$ ./build.sh staging accept
